
By Linda Kinstler

Paula Goldman has introduced tools and processes to help employees and clients "stretch their moral imagination."

Photo: Alex Zyuzikov/Getty Images

She arrived at Salesforce just over a year ago to become its first-ever Chief Ethical and Humane Use Officer, taking on an unprecedented and decidedly ambiguous title that was created specifically for her unprecedented, ambiguous, yet highly specific job:

I ask her how those norms are decided in the first place.

In the wake of the Cambridge Analytica scandal, employee walkouts, and other political and privacy incidents, tech companies faced a wave of calls to hire what researchers at the Data & Society Research Institute call "ethics owners," people responsible for operationalizing,

...in practical and demonstrable ways.

Salesforce hired Goldman away from the Omidyar Network as the culmination of a seven-month crisis-management process that came after Salesforce employees protested the company's involvement in the Trump administration's immigration work.

Other companies, responding to their own respective crises and concerns, have hired a small cadre of similar professionals - philosophers, policy experts, linguists and artists - all to make sure that when they promise not to be evil, they actually have a coherent idea of what that entails.

So then what happened?

While some tech firms have taken concrete steps to insert ethical thinking into their processes, Catherine Miller, interim CEO of the ethical consultancy Doteveryone, says there's also been a lot of "flapping round" the subject.

Critics dismiss it as "ethics-washing," the practice of merely kowtowing in the direction of moral values in order to stave off government regulation and media criticism.

The term belongs to the growing lexicon around technology ethics, or "tethics," an abbreviation that began as satire on the TV show "Silicon Valley," but has since crossed over into occasionally earnest usage.

Google, infamously, created an AI Council and then, in April of last year, disbanded it after employees protested the inclusion of an anti-LGBTQ advocate.

Today, Google's approach to ethics includes the use of "Model Cards" that aim to explain its AI.

The company has made more-substantial efforts:

More than 100 Google employees have attended ethics trainings developed at the Markkula center.

The company also developed a fairness module as part of its Machine Learning Crash Course, and updates its list of "responsible AI practices" quarterly.

...

The Markkula center, where Vallor works, is named after Mike Markkula Jr., the "unknown" Apple co-founder who, in 1986, gave the center a seed grant in the same manner that he gave the young Steve Jobs an initial loan.

He never wanted his name to be on the building - that was a surprise, a token of gratitude, from the university.

Markkula has retreated to a quiet life, working from his sprawling gated estate in Woodside.

These days, he doesn't have much contact with the company he started,

But when he arrived at the Santa Clara campus for an orientation with his daughter in the mid-'80s, he was Apple's chairman, and he was worried about the way things were going in the Valley.

At Apple, he spent a year drafting the company's "Apple Values" and composed its famous marketing philosophy ("Empathy, Focus, Impute.").

He says that there were many moments, starting out, when he had to make hard ethical choices,

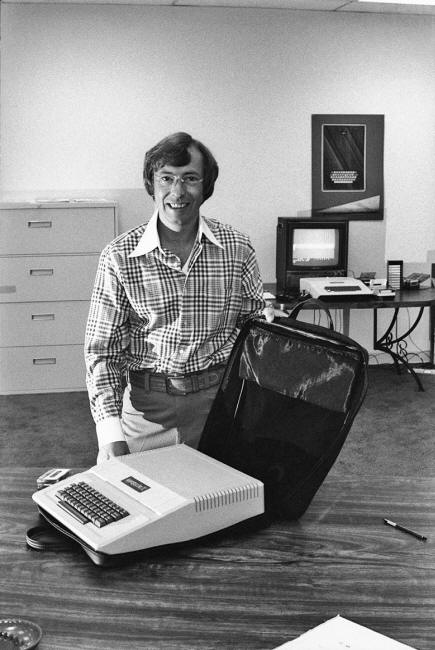

Former Apple CEO Mike Markkula spent a year crafting the company's values statement. Today, the ethics center at Santa Clara University is named for him. Photo: Tom Munnecke/Getty Images

The Markkula Center for Applied Ethics is one of the most prominent voices in tech's ethical awakening.

On its website, it offers a compendium of materials on technology ethics. Every one of these tools is an attempt to operationalize the basic tenets of moral philosophy in a way that engineers can quickly understand and implement.

But Don Heider, the Markkula center's executive director, is quick to acknowledge that it's an uphill fight.

Even at Salesforce, practitioners like Yoav Schlesinger, the company's principal of ethical AI practice, worry about imposing an "ethics tax" on their teams - an ethical requirement that might call for "heavy lifting" and slow down their process.

Under Goldman's direction, the company has rolled out a set of tools and processes to help Salesforce employees and its clients,

The company offers an educational module that trains developers in how to build "trusted AI" and holds employee focus groups on ethical questions.

Goldman agrees:

The company has also created explainability features, confidential hotlines, and protected fields that warn Salesforce clients that data such as ZIP codes is highly correlated with race.

Salesforce has refined its acceptable use policy to prevent its e-commerce platform from being used to sell a wide variety of firearms and to prevent its AI from being used to make the final call in legal decision-making.

The Ethical and Humane Use team holds office hours where employees can drop by to ask questions.

They have also begun to make their teams participate in an exercise called "consequence scanning," developed by researchers at Doteveryone.

Teams are asked to answer three questions:

The whole process is designed to fit into Agile software development and to be as unintrusive as possible.

Like most ethical interventions currently in use, it's not really supposed to slow things down, or change how business operates.

Beware the "ethics tax."

Engineers, he says,

And therein lies the problem.

These approaches, while well-intentioned and potentially impactful, tend to suggest that ethics is something that can be quantified, that living a more ethical life is merely a matter of sitting through the right number of trainings and exercises.

With colleagues Danah Boyd and Emmanuel Moss, Metcalf recently surveyed a group of 17 "ethics owners" at different companies.

One engineer told them that people in tech,

An executive told them that market pressures got in the way:

The "ethics owners" they spoke to were all experimenting with different approaches to solving problems, but often tried to push for simple, practical solutions adopted from other fields, like checklists and educational modules.

If and when ethics does "arrive" at a company, it often does so quietly, and ideally invisibly.

In a recent survey of UK tech workers, Miller and her team found that 28% had seen decisions made about a technology that they believed would have a negative effect upon people or society.

Among them, one in five went on to leave their companies as a result.

...

At Enigma, a small business data and intelligence startup in New York City, all new hires must gather for a series of talks with Michael Brent, the philosophy professor working as the company's first data ethics officer.

At these gatherings, Brent opens his slides and says,

The idea is that starting at the beginning is the only way to figure out the way forward, to come up with new answers.

The engineers he works with - "25-year-olds, fresh out of grad school, they're young, they're fucking brilliant" - inevitably ask him whether all this talk about morals and ethics isn't just subjective, in the eye of the beholder.

They come to his office and ask him to explain.

Brent met Enigma's founders, Marc DaCosta and Hicham Oudghiri, in a philosophy class at Columbia when he was studying for his doctorate.

They became fast friends, and the founders later invited him to apply to join their company. Soon after he came on board, a data scientist at Enigma called him over to look at his screen.

It was a list of names of individuals and their personal data.

The engineer hadn't realized that he would be able to access identifying information.

The fact that Brent, and many others like him, are even in the room to ask those questions is a meaningful shift.

Trained philosophers are consulting with companies and co-authoring reports on what it means to act ethically while building unpredictable technology in a world full of unknowns.

Another humanities Ph.D. who works at a big tech company tells me that the interventions he ends up making on his team often involve having his colleagues simply do less of what they're doing, and articulating ideas in a sharper, more precise manner.

"It's the Wild West, and anyone who wants to say they are ethicists can just say it. It's nonsense..." - Reid Blackman

But for every professional entering the field, there are just as many - and probably more - players whom Reid Blackman, a philosophy professor turned ethics consultant, calls "enthusiastic amateurs."

The result is that to wade into this field is to encounter a veritable Tower of Babel.

A recent study of 84 AI ethics guidelines from around the world found that,

This is also, in part, a geopolitical problem:

I asked Goldman and Schlesinger what would happen if a Salesforce client decided to ignore all of the ethical warnings they had worked into the system and use data that might lead to biased results.

Goldman paused... The thing is, ethics at this point is still something you can opt out of.

Schlesinger explains that Salesforce's system is currently designed to give the customer,

Likewise, at Enigma, the company's co-founders and leadership team can choose not to listen to Brent's suggestions.
