
Universidade de São Paulo from FranciscoRodrigues Website

The blind giant Orion carrying his servant Cedalion on his shoulders to act as his eyes. Illustration in a medieval manuscript.

The evolution of knowledge and the myth of the lone genius...

With this phrase, Newton recognized that his discoveries were the result of continuing and improving on the knowledge accumulated by previous generations.

The truth is that every scientific and intellectual advance is the result of a collective process in which each new idea builds on what has already been discovered.

Discoveries or new ideas don't come out of nowhere: they are built up over time, with the combined efforts of many thinkers who contribute, even anonymously, to the progress of knowledge.

Although Einstein, Darwin and Pasteur developed new areas of knowledge in impressive ways, these achievements were not the result of flashes of individual genius, but of the work of thousands of previous researchers.

hesitates to forget its founders is lost."

Alfred North Whitehead.

Newton was right.

Galileo had observed the moons of Jupiter, showing that not all celestial bodies orbit the Earth, and formulated the principle of inertia, according to which a body tends to remain at rest or in uniform rectilinear motion in the absence of external forces. This principle later became Newton's first law.

Kepler, in turn, based on the data meticulously collected by Tycho Brahe, formulated the three laws of planetary motion, showing that the planets describe elliptical orbits around the Sun. In addition, a vast collection of astronomical observations and natural phenomena, such as tides and eclipses, was already available.

Copernicus was probably familiar with the writings of Aristarchus of Samos (c. 310–230 BC), who had anticipated this heliocentric idea.

In other words,

An important point to make here is that when we learn the laws of physics at school, we have no idea that Newton knew of the earlier work of Galileo and Kepler.

We learn Newton's laws as if they came from a flash of inspiration, creating the myth of the lone genius.

This way of teaching gives us the impression that discoveries were made individually, which celebrates the importance and genius of specific individuals, but overshadows the contribution of a large number of scientists.

It does not allow us to see knowledge as a whole, but rather as disconnected parts, indicating only the specific advances in short periods of time, going back only one generation at a time.

In fact, we should learn that Galileo and Kepler exchanged ideas and correspondence.

Their books were read by Newton, who also had access to ancient Greek manuscripts and medieval texts. In other words, Newton had a wealth of prior knowledge at his disposal.

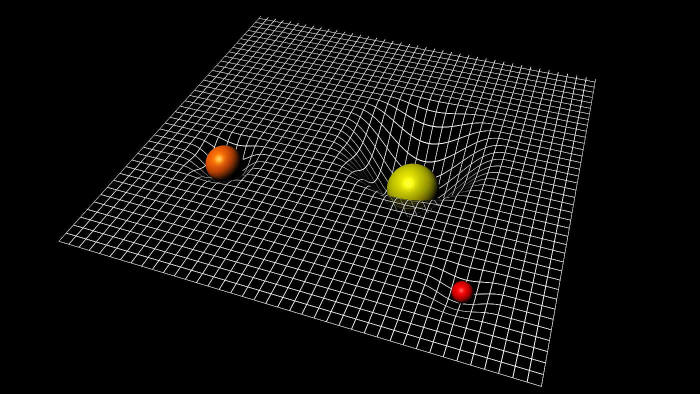

In fact, Einstein's ideas were also based on earlier contributions, such as those of the physicist Hendrik Antoon Lorentz, who formulated the transformations that bear his name.

These transformations explained phenomena such as the dilation of time and the contraction of space, which Einstein later reinterpreted as natural consequences of the structure of space-time.
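
For readers who want to see what these transformations look like, here is a short sketch in standard textbook notation. The symbols are assumptions introduced only for this illustration and do not appear in the original text: $v$ is the relative speed of two observers along the $x$ axis, $c$ is the speed of light, $\Delta\tau$ is the time measured by a clock at rest and $L_0$ is the length of an object at rest.

```latex
\begin{aligned}
\gamma &= \frac{1}{\sqrt{1 - v^2/c^2}}, \\
x' &= \gamma\,(x - v t), \qquad t' = \gamma\left(t - \frac{v x}{c^2}\right), \\
\Delta t &= \gamma\,\Delta\tau \quad \text{(time dilation)}, \qquad
L = \frac{L_0}{\gamma} \quad \text{(length contraction)}.
\end{aligned}
```

As a worked example, for $v = 0.6\,c$ we get $\gamma = 1/\sqrt{1 - 0.36} = 1.25$: a moving clock appears to run 1.25 times slower, and a moving ruler appears shortened to 80% of its rest length.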

Another influence came from the Austrian physicist and philosopher Ernst Mach, known for his criticism of Newtonian physics' concepts of absolute space and time.

The so-called Mach principle proposes that the inertial forces experienced by a body, such as the tendency to remain at rest or in motion, result from interaction with the total mass of the universe, rather than from properties inherent in empty space.

This idea directly influenced Einstein in his search for a deeper understanding of inertia, and was one of the starting points for formulating the theory of general relativity.

These influences helped him to develop his vision of an interconnected space-time in which gravity arises from the curvature of this four-dimensional fabric.

Even in the case of the photoelectric effect, for which Einstein won the Nobel Prize, he used Max Planck's earlier ideas.

This is yet another example of how great scientific discoveries do not happen in isolation.

gravity is not a force acting between massive bodies, as proposed in Isaac Newton's theory of universal gravitation. Instead, general relativity describes gravity as a manifestation of the curvature of spacetime, shaped by the presence of mass and energy.

In ancient Greece, Anaximander of Miletus suggested that humans had evolved from other species when he reflected on the fragility of children at birth.

According to him, humans are extremely dependent in the first few years of life and would not be able to survive on their own without prolonged care. This led him to conclude that the first humans could not have arisen in their present form because they would not have survived.

He therefore suggested that humans must have evolved from animals of another species, possibly aquatic, whose initial stage of life was less vulnerable, allowing them to survive and then evolve.

The idea that living things change over time has therefore been discussed since ancient Greece.

(and of animals too), those who have learned to co-operate and to improvise have prevailed."

Charles Darwin.

If leftovers were left in a place with a lot of rubbish, rats would supposedly arise from them. Similarly, spoiled meat would produce flies. Although this idea seems absurd today, it prevailed for over two thousand years and was only challenged by Francesco Redi in the 17th century.

Redi carried out controlled experiments with meat in closed and open jars and showed that fly larvae only appeared when the adult insects had access to the meat.

His studies marked the beginning of the decline of the theory of spontaneous generation, although it was not definitively refuted until the 19th century by Louis Pasteur, who showed that micro-organisms do not arise spontaneously either, but come from other micro-organisms in the environment.

In his famous experiments with swan-neck flasks, he showed that when a culture broth was boiled - eliminating all micro-organisms - no living creatures emerged as long as the curved neck remained intact, because it kept airborne contaminants from reaching the broth. When the neck of the flask was broken, allowing air particles to enter, the micro-organisms reappeared.

Pasteur thus proved that the origin of life observed in culture media came from germs already present in the environment, putting an end to the idea of spontaneous generation and paving the way for modern microbiology.

With these advances, Darwin had the foundation he needed to explain the origin of species. He was standing on the shoulders of giants.

According to this theory, the universe was made up of four basic elements: earth, water, air and fire.

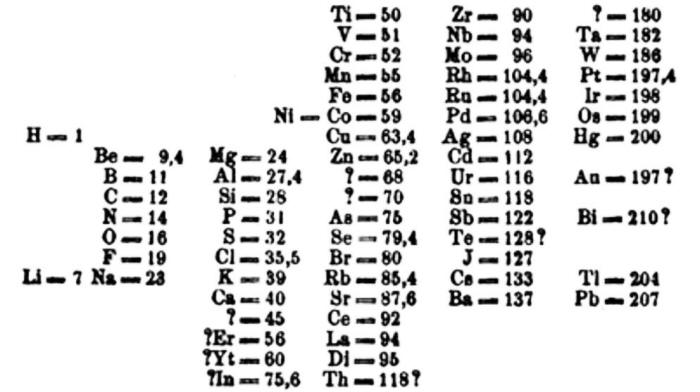

Based on experiments, Robert Boyle was the first to question this view, suggesting that the true elements were substances that could not be broken down into simpler ones - a precursor to the modern definition of a chemical element.

For example,

Boyle's work paved the way for Joseph Priestley to discover oxygen, John Dalton to propose a way of assigning relative masses to the chemical elements, and Antoine Lavoisier to identify 33 elements and formulate the law of conservation of mass, according to which, in a closed system, the total mass of the reactants is equal to the total mass of the products.

fire, air, water and earth are the true principles of things…"

Robert Boyle.

In other words, just as the elements were organized into a coherent structure, like a solitaire game in which each card must be in the right place, scientific progress can be seen as an orderly sequence in which each advance builds on previous discoveries.

In this way, chemistry also developed from the contributions of a number of scientists, most of them unknown.

In Mendeleev's periodic table, the elements are organized as in the solitaire game.

Beginning with Thales of Miletus, considered the first philosopher to break with mythical thinking that attributed natural phenomena to the actions of the gods, we follow a line of philosophical debate and argument that goes through Anaximander, refuted by Anaximenes, and so on, to Socrates and Plato.

The pre-Socratic philosophers tried to explain the world through reason, without recourse to mythical influences. In this way, they proposed that the universe arose from a fundamental principle or element, called arche, rather than from the actions of the gods as told in stories of mythical and magical events.

After them, Socrates and Plato shifted the focus of philosophy to man, ethics and knowledge.

Philosophy was thus born of unknown thinkers who challenged traditional beliefs. Plato would take this thinking further and create the first school of thought, the Academy of Athens.

In other words,

As Hegel summarized,

For Hegel, this process is the driving force behind the development of ideas, history and reality itself, working like a spiral movement in which each synthesis becomes a new thesis that drives progress.

In other words,

"All Western philosophy is nothing but footnotes to the pages of Plato."

Alfred North Whitehead.

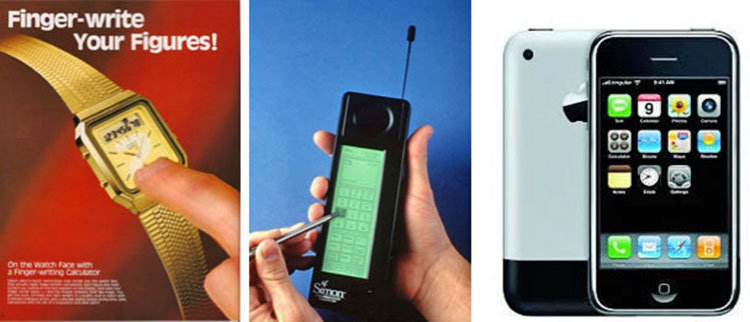

On 9 January 2007, Steve Jobs took to the stage at Macworld to announce the iPhone, a device that at first glance seemed like an isolated act of genius that came out of nowhere.

But while innovative, these features were the result of earlier advances, many of which went unnoticed or failed to achieve much commercial success.

In 1994, IBM created the Simon Personal Communicator, considered to be the first smartphone in history. It could send e-mails, displayed the world time, offered a notepad, a calendar and even predictive typing.

However, its battery lasted only a few hours, which limited its adoption and prevented it from becoming a global success.

In other words,

And let's not forget other devices that existed before the iPhone, such as digital cameras, cassette and DVD players, electronic diaries and digital watches.

All of these technological advances have been condensed into a single 150g device that has radically changed the way we connect and access information.

The Casio AT-550 and the Simon Personal Communicator were the iPhone's predecessors.

The Windows operating system was no different.

Bill Gates and Paul Allen founded Microsoft in 1975 as a software company to develop and sell software for personal computers, starting with a BASIC language interpreter for the Altair 8800.

In 1980, IBM was looking for an operating system for the IBM Personal Computer (PC). Microsoft saw an opportunity.

Tim Paterson, a programmer at Seattle Computer Products, had developed QDOS (Quick and Dirty Operating System) in 1980.

Microsoft acquired this system, adapted it and licensed it to IBM, and went on to become a computing giant.

The concept of multi-window graphical interfaces can be traced back to developments such as the Xerox Alto, a computer developed in the 1970s that already featured a graphical user interface (GUI) with windows, icons and a pointer, long before the introduction of Windows.

Apple also played an important role with the Apple Lisa and later the Macintosh, which popularized the concept of the graphical user interface.

So far from being an isolated act of genius, the invention of Windows was the result of a series of technological advances and innovations by various companies and individuals over the decades.

was one of the most popular in the 90s.

Let's take the example of Minecraft, the best-selling video game of all time, with more than 200 million copies sold.

Created independently by Markus "Notch" Persson, a Swedish programmer, its development was based on previous games such as Infiniminer (a block-mining game developed by Zachtronics in 2009) and Dwarf Fortress (an open-world construction and survival game).

In fact, the mining mode was only added after Infiniminer became public domain.

This mining game, in which players dig and build in a randomly generated world, was a major inspiration for Persson, who decided to incorporate the mining and resource gathering mechanics into his own project after observing Infiniminer's gameplay.

Games like Dwarf Fortress also influenced the idea of an open world, allowing for the dynamic and expansive environment that would become one of Minecraft's trademarks.

In 2009, Persson released the first public version of Minecraft, which quickly became a worldwide success.

In 2014, Microsoft acquired Persson's Mojang for $2.5 billion. So we see that the Minecraft game was not an isolated invention or a sudden stroke of genius, but the product of an ecosystem of previous ideas and innovations, showing how knowledge and creations can build on previous ideas, each contributing to the success of the next step.

Minecraft is the most successful game of all time.

These forums played a crucial role in the development of Minecraft, providing Persson with a platform to interact with players and other developers, receive suggestions, report problems and implement improvements.

The community's constant feedback was essential to improving the game's mechanics, from adding new features to fixing bugs, and was fundamental to the game's growth in its early stages.

In this way, the entire community helped make the game the success it is today.

Another more recent example is Large Language Models (LLMs).

Perhaps the most popular is OpenAI's ChatGPT, which, despite what it might seem, didn't come out of nowhere.

is as a reasoning engine, not as a database of facts. They are not memorized, they reason."

Sam Altman, CEO of OpenAI.

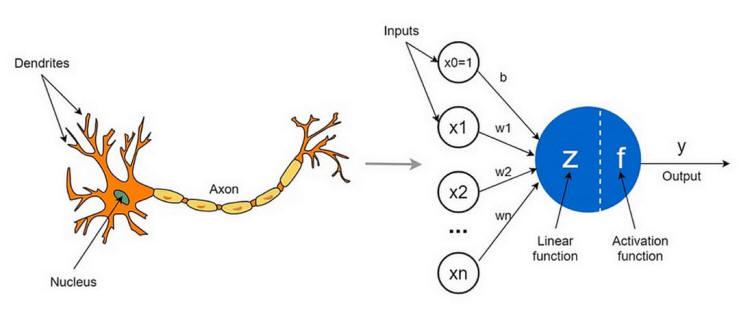

One of the first artificial neural networks, the Perceptron, was created by Frank Rosenblatt in 1958. Inspired by the functioning of biological neurons, the model was capable of performing simple tasks such as binary classification.

In the following decades, especially in the 1970s and 1980s, researchers began to interconnect several "neurons" in multiple layers.

In 1986, Rumelhart, Hinton and Williams popularized the error backpropagation algorithm, which made it possible to train neural networks by adjusting the weights of each connection based on the errors propagated from the outputs to the internal layers.
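
To make the idea of propagating errors backwards more concrete, here is a minimal sketch in Python. It is not the 1986 formulation: it assumes a deliberately tiny network with a single hidden unit and a single output, and every name in it (`forward`, `backprop`, `loss`, `train`) is hypothetical, chosen only for this illustration.

```python
import math


def forward(x, w1, w2):
    """Tiny two-layer network: one hidden tanh unit, one linear output."""
    hidden = math.tanh(w1 * x)
    output = w2 * hidden
    return hidden, output


def loss(x, target, w1, w2):
    """Squared error between the network output and the target."""
    _, output = forward(x, w1, w2)
    return 0.5 * (output - target) ** 2


def backprop(x, target, w1, w2):
    """Gradients of the squared error with respect to both weights."""
    hidden, output = forward(x, w1, w2)
    # Error measured at the output.
    d_output = output - target
    # Gradient for the weight that connects the hidden unit to the output.
    grad_w2 = d_output * hidden
    # Propagate the error back through w2 and through the tanh nonlinearity.
    d_hidden = d_output * w2 * (1.0 - hidden ** 2)
    # Gradient for the weight that connects the input to the hidden unit.
    grad_w1 = d_hidden * x
    return grad_w1, grad_w2


def train(x, target, w1=0.5, w2=0.5, learning_rate=0.1, steps=200):
    """Plain gradient descent: nudge each weight against its gradient."""
    for _ in range(steps):
        grad_w1, grad_w2 = backprop(x, target, w1, w2)
        w1 -= learning_rate * grad_w1
        w2 -= learning_rate * grad_w2
    return w1, w2
```

Calling `train(1.0, 0.8)` returns a pair of weights for which `loss(1.0, 0.8, w1, w2)` is lower than at the starting weights. Real networks apply the same chain-rule bookkeeping to millions of connections at once.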

With the advance of computing power and the mass availability of data, the 2000s marked the emergence of deep neural networks.

A turning point came in 2012, when AlexNet, a deep convolutional network, won the ImageNet competition by a wide margin.

This victory demonstrated the potential of deep learning and marked the beginning of the modern era of artificial intelligence.

Both the biological neuron and the Perceptron receive multiple input signals, process them and generate a single output. In the biological neuron, the signals arrive via the dendrites, are integrated into the cell body and, if they exceed a threshold, the neuron fires an action potential via the axon. In the Perceptron, the inputs are numerical values that are multiplied by weights, added together and passed through an activation function that decides whether there will be an output or not.
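
The Perceptron described above can be sketched in a few lines of Python. This is an illustrative toy, not Rosenblatt's original implementation: the function names, the step activation with a threshold at zero and the logical AND data set are all assumptions made for the example.

```python
def perceptron_output(inputs, weights, bias):
    """Multiply inputs by weights, add them up, then apply a step activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The unit "fires" (outputs 1) only if the sum exceeds the threshold of 0.
    return 1 if weighted_sum > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule applied to (inputs, target) pairs."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # The error is +1, 0 or -1 and decides the direction of the update.
            error = target - perceptron_output(inputs, weights, bias)
            weights = [
                w + learning_rate * error * x for w, x in zip(weights, inputs)
            ]
            bias += learning_rate * error
    return weights, bias


# Hypothetical binary classification task: the logical AND of two inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
```

After `weights, bias = train_perceptron(AND_SAMPLES)`, calling `perceptron_output` on each of the four input pairs reproduces the AND targets. A single Perceptron can only separate classes with a straight line, which is one reason researchers later moved to networks with several layers.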

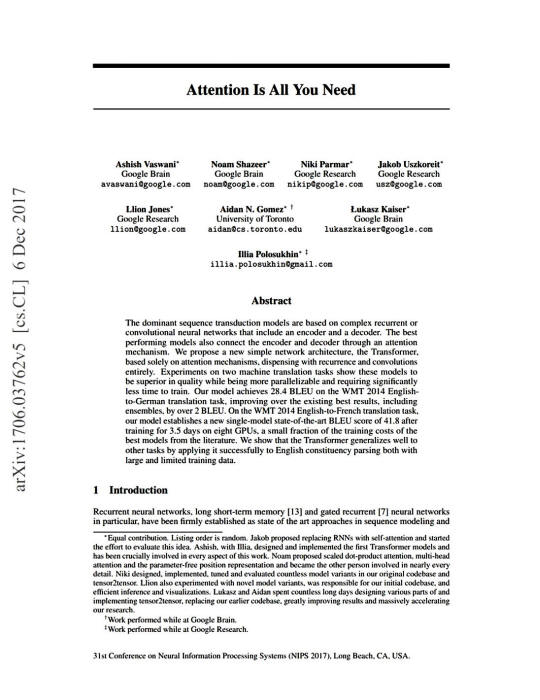

It introduced an innovative neural network architecture:

Based on the attention mechanism, more specifically self-attention, this model allowed simultaneous processing of entire input sequences, capturing relationships between words regardless of the distance between them.

The Transformer architecture revolutionised natural language processing and became the basis for more advanced language models.
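
As a rough illustration of self-attention, the Python sketch below lets every position in a sequence look at every other position and returns, for each one, a weighted average of the whole sequence. It makes a strong simplifying assumption: the input vectors themselves serve as queries, keys and values, whereas a real Transformer learns separate projections for each role and uses several attention heads. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention over a whole sequence.

    Simplifying assumption: queries, keys and values are the input vectors
    themselves; no learned projection matrices are used.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Compare this position with every position, however far away it is.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is a weighted average of all the vectors in the sequence.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ])
    return outputs
```

For example, in `self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]])` the first and third positions hold the same vector, so they attend strongly to each other even though another element sits between them, and they receive identical outputs.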

From this, OpenAI developed the GPT (Generative Pre-trained Transformer), a model trained on large amounts of text to learn linguistic patterns and predict the next word in a sequence.

This training is self-supervised, without the need to manually label the data.
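
The sketch below shows why no manual labelling is needed: in next-word prediction, the "label" for each word is simply the word that follows it in the text. To keep the example short, it swaps the Transformer for a plain table of word-pair counts (a bigram model), which is a far simpler technique than GPT. All function names are hypothetical.

```python
from collections import Counter, defaultdict


def build_training_pairs(text):
    """Pair every word with the word that follows it; the text labels itself."""
    words = text.lower().split()
    return list(zip(words, words[1:]))


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for current_word, next_word in build_training_pairs(text):
        counts[current_word][next_word] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it is unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]
```

Trained on the sentence "we stand on the shoulders of giants and giants stand on the shoulders of others", the model predicts "shoulders" after "the" and returns `None` for a word it has never seen. A language model such as GPT plays the same guessing game, but with a neural network that takes the whole preceding context into account instead of a single word.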

The result of this process is ChatGPT, a version of GPT adapted and optimized for dialogue with users, capable of answering questions, assisting with tasks and maintaining conversations in a fluid and understandable way.

The paper "Attention Is All You Need" introduced the concept behind Transformers, which underlies ChatGPT and other language models.

They are creations that would not have been possible without the hard work, discoveries and mistakes of thousands of researchers over the last few decades, often unknown to the general public, but fundamental to the advancement of technology.

Often, however, we remember only those who stood out for their discoveries, forgetting the vast army of thinkers and researchers who paved the way before them.

Of course, we should celebrate the great names in science and philosophy because their work was groundbreaking and created new fields of research.

But we must not neglect those whose contributions enabled these great thinkers to see further.

Celebrating only these few geniuses has affected scientific development and created a gap between scientific, technological and philosophical knowledge and the general public.

The idea of the "lone genius" suggests that in order to participate in the construction of knowledge, it is necessary to possess an early genius, and that only a fortunate few have the opportunity to follow this path.

This idea distances science, technology and philosophy from society at large.

But few people know about,

Ibn al-Haytham pioneered the use of the scientific method, in which theories are tested through controlled experiments - a fundamental concept of all modern science.

Nettie Stevens made a major contribution to biology by discovering that the biological sex of organisms is determined by the sex chromosomes, specifically the X and Y chromosomes.

Alfred Russel Wallace independently reached similar conclusions to Darwin on the theory of evolution, demonstrating how great discoveries depend not only on individual genius but also on historical context and the accumulation of collective knowledge.

Researchers at Merton College, Oxford, led by Thomas Bradwardine, played a crucial role in the development of medieval physics, particularly in the 14th century.

They pioneered the application of mathematics to physics, laying the foundations for the quantitative analysis of natural phenomena.

There are many other anonymous thinkers, rarely or never mentioned in history books, who made fundamental contributions to many fields of knowledge.

Just as we don't need to play like Pelé or Maradona to enjoy or practice football, we don't need to be like Einstein, Kant or Jobs to contribute to the development of science, philosophy or technology.

All it takes is curiosity and a desire to learn...

Today, with access to the Internet, bookshops and universities, a vast world of knowledge is at our fingertips. We are all capable of learning and creating new ideas because we stand on the shoulders of giants...

So let's use this advantage to see further.
