|

Nottingham Trent University

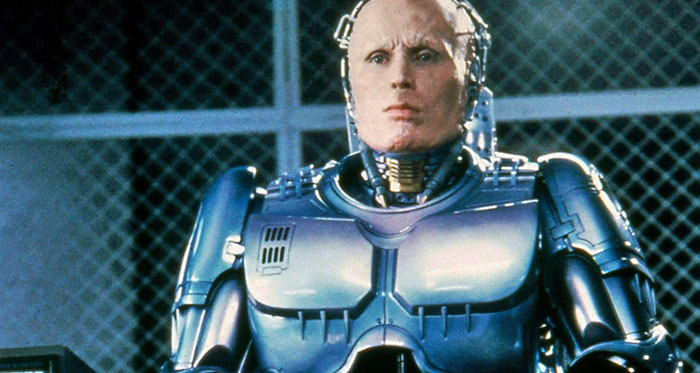

He has a robotic body and a full brain-computer interface that allows him to control his movements with his mind.

He can access online information such as suspects' faces, uses artificial intelligence (A.I.) to help detect threats, and his human memories have been integrated with those from a machine.

It is remarkable to think that the movie's key mechanical robotic technologies have now almost been accomplished by the likes of Boston Dynamics' running, jumping Atlas and Kawasaki's new four-legged Corleo.

Similarly, we are seeing robotic exoskeletons that enable paralyzed patients to do things like walk and climb stairs by responding to their gestures.

Developers have lagged behind when it comes to building an interface in which the brain's electrical pulses can communicate with an external device.

This too is changing, however.

In the latest breakthrough, a research team based at the University of California has unveiled a brain implant that enabled a woman with paralysis to livestream her thoughts via AI into a synthetic voice with just a three-second delay.

The concept of an interface between neurons and machines goes back much further than RoboCop.

In the 18th century, an Italian physician named Luigi Galvani discovered that when electricity was passed through certain nerves in a frog's leg, the leg would twitch.

The interface fr-OG. Zmrzlnr

This paved the way for the whole study of electrophysiology, which looks at how electrical signals affect organisms.

The initial modern research on brain-computer interfaces started in the late 1960s, with the American neuroscientist Eberhard Fetz hooking up monkeys' brains to electrodes and showing that they could move a meter needle.

Yet if this demonstrated some exciting potential, the human brain proved too complex for this field to advance quickly.

In other words, there's a great deal to figure out.

A tough nut to crack. Jolygon

Much of the recent progress has been based on advances in our ability to map the brain, identifying the various regions and their activities.

A range of technologies can produce insightful images of the brain, including, ...while others monitor certain kinds of activity, including,

These techniques have helped researchers to build some incredible devices, including wheelchairs and prosthetics that can be controlled by the mind.

But whereas these are typically controlled with an external interface like an EEG headset, chip implants are very much the new frontier.

They have been enabled by advances in AI chips and micro electrodes, as well as the deep learning neural networks that power today's AI technology.

This allows for faster data analysis and pattern recognition, which, together with the more precise brain signals that can be acquired using implants, has made it possible to create applications that run virtually in real time.

For instance, the new University of California implant relies on ECoG, a technique developed in the early 2000s that captures patterns from a thin sheet of electrodes placed directly on the cortical surface of someone's brain.

In their case, the complex patterns picked up by the implant of 253 high-density electrodes are processed using deep learning to produce a matrix of data from which it's possible to decode whatever words the user is thinking.

This improves on previous models that could only create synthetic speech after the user had finished a sentence.

However, it's also worth emphasizing that deep learning neural networks are enabling more sophisticated devices that rely on other forms of brain monitoring.

Our research team at Nottingham Trent University has developed an affordable brainwave reader using off-the-shelf parts that enables patients who are suffering from conditions like,

...to be able to answer "yes" or "no" to questions.

There's also the potential to control a computer mouse using the same technology.

Dr Ahmet Omurtag demonstrating the NTU device, alongside project lead Professor Amin Al-Habaibeh and their colleague Sharmila Majumdar. Author provided

The future

The progress in, ...that enabled these developments is expected to continue in the coming years, which should mean that brain-computer interfaces keep improving.

In the next ten years, we can expect more technologies that provide disabled people with independence by helping them to move and communicate more easily.

This entails improved versions of the technologies that are already emerging, including,

In all cases, it will be a question of balancing our increasing ability to interpret high-quality brain data with invasiveness, safety and costs.

It is more in the medium to long term that I would expect to see many of the capabilities of a RoboCop, including planted memories and built-in trained skills supported with internet connectivity.

We can also expect to see high-speed communication between people via "brain Bluetooth".

It should be similarly possible to create a Six Million Dollar Man, with enhanced vision, hearing and strength, by implanting the right sensors and linking the right components (actuators) to convert neuron signals into action.

No doubt applications that haven't been thought of yet will also emerge as our understanding of brain functionality increases.

Clearly, it will soon become impossible to keep deferring ethical considerations.

With every step forward, questions like these become ever more pressing.

It's time to start thinking about the extent to which we want to integrate these technologies into society, and the sooner the better.

|