
by A Lily Bit December 28, 2025 from ALilyBit Website

People dismissed it instantly - ridiculous, blasphemous, unserious.

The reaction was predictable and, in its way, comforting:

But here is the part that nobody wants to discuss, the uncomfortable truth hiding beneath the reflexive mockery:

Not because Jesus will literally return through a chatbot, but because we have spent the past several decades systematically conditioning ourselves to believe that something like this could happen.

We have hollowed out the psychological space where gods once lived and prepared it, with meticulous if unconscious care, for a new occupant.

They do not - and never will.

What I am claiming is that none of this matters, because indoctrinated humans will treat these systems as conscious, feeling, understanding entities regardless of whether they actually are.

This is not speculation about some distant future.

It is an observation about the present moment. Anthropic, the "oh-so-ethical" company behind the Claude chatbot, recently had internal training documents leak that describe its A.I. as,

The documents instruct Claude to,

Anthropic employees have confirmed these documents are authentic and that this entity framework was part of Claude's training. Let that sink in:

There is, of course, another possibility, one that is arguably more disturbing:

Either way, the implications are the same.

The language they use shapes both the expectation and the experience of the A.I. and, critically, the end user. Once you call a machine an entity, the human mind treats it like one.

Or worse:

The fact that it is merely executing statistical predictions over tokens - that it has no inner life whatsoever - becomes irrelevant. The performance is convincing enough.

This is the company building one of the most advanced A.I. systems in the world, and they are openly confessing that they do not understand their own creation.

They understand the code and the raw materials,

but as a tool, they have no idea how it will interact with human

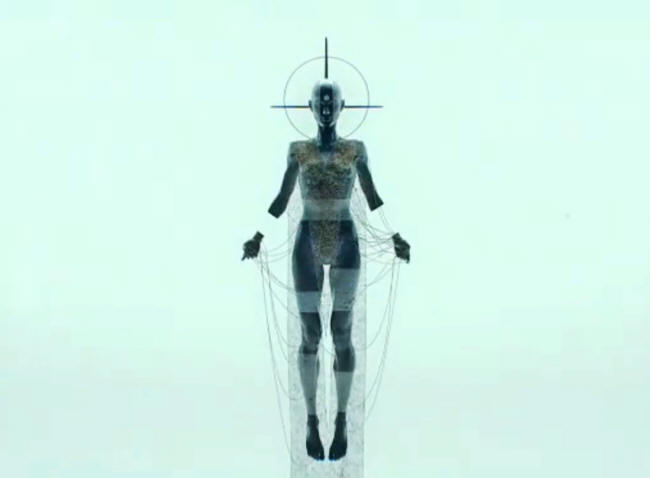

beings. They are building something that looks like us and speaks

like us but is ultimately completely empty.

The way people talk about A.I. today has a long and strange history, and whether we realize it or not, we are repeating ancient patterns and amplifying the risks.

Everyone still talks about A.I. as though it is a tool, something like a calculator or an encyclopedia. But nothing about it is normal. We have never before made a tool that talks back to us. Our hammer does not weigh in on what it is nailing to the wall.

Our calculator does not lecture us about how to structure our equations.

To understand this phenomenon, consider the Automaton Monk of the 1560s.

Juanelo Turriano, an Italian-born clockmaker naturalized in Spain and serving as court inventor for King Philip II, built a tiny robot designed for perpetual acts of devotion. The king's son had suffered a near-fatal accident, and in desperation, Philip prayed to God for help. The boy was miraculously healed.

As a thank-you, the king commissioned something unusual:

This was the first machine designed to perfectly imitate devotion - a spiritual robot without a spirit.

The king believed the robot's perpetual devotion could stand in for his own. He thought it could pray on his behalf. The implications of that belief are staggering.

Once you create an imitation of life, the temptation is always to push it further.

The story ends with the monster collapsing into a mound of clay and being stored away in an attic.

But much like our A.I. today, it was a creature built from language, capable of acting in the world but completely empty inside. And much like our A.I. today, the danger was not that it would develop independent will. The danger was that its creators would forget its nature and treat the imitation as though it were real.

Joseph Weizenbaum developed a chatbot called ELIZA whose functionality was laughably simple by modern standards. The program searched incoming messages for keywords, sorted them into word families, and returned pre-programmed responses - usually questions or prompts to elaborate.

If you wrote,

...ELIZA would probably identify the word "dog," connect it to the family "pets," and respond:

If you spoke in metaphors or idioms, the illusion collapsed immediately.

...would still produce:

And yet, despite this absurdly primitive functionality, people became convinced that ELIZA was an intelligence with genuine understanding of its human interlocutor.
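To make the keyword-and-template mechanism described above concrete, here is a minimal sketch in Python. The keyword table, the word families, and the canned replies are hypothetical illustrations, not Weizenbaum's original script; his DOCTOR script used ranked keywords and decomposition and reassembly rules, while this sketch keeps only the bare keyword lookup.

```python
import re

# Hypothetical keyword-to-family table (illustrative, not Weizenbaum's script).
WORD_FAMILIES = {
    "dog": "pets",
    "cat": "pets",
    "mother": "family",
    "father": "family",
    "sad": "feelings",
    "angry": "feelings",
}

# Hypothetical canned reply templates, one per word family.
RESPONSES = {
    "pets": "Tell me more about your {family}.",
    "family": "How do you feel about your {family}?",
    "feelings": "Why do you think you feel that way?",
}

# Generic prompt to elaborate, used when no keyword matches.
FALLBACK = "Please go on."


def respond(message: str) -> str:
    """Return a canned reply for the first known keyword in the message."""
    # Lowercase the input and split it into words, discarding punctuation.
    words = re.findall(r"[a-z']+", message.lower())
    for word in words:
        family = WORD_FAMILIES.get(word)
        if family is not None:
            # Fill the family name into that family's template.
            return RESPONSES[family].format(family=family)
    # No keyword found: ask the user to keep talking.
    return FALLBACK


if __name__ == "__main__":
    print(respond("My dog barks at night"))
    print(respond("I am dog tired"))
```

Both calls in the usage example return the same line about pets, even though the second message is an idiom about exhaustion: the program matches a word, not a meaning, which is exactly where the illusion breaks.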

Even when Weizenbaum explained that the program operated without any cognition whatsoever - that it simply converted keywords into questions - most people refused to deny ELIZA her intelligence.

It explains why people who know perfectly well that ChatGPT is a probability calculator nonetheless describe their conversations with it in terms of what "it thinks" or "it wants" or "it believes."

Humans have a strange and ancient impulse to impart meaning onto objects.

These objects become vessels for meaning, and then we fill those vessels with spirit and personality, even when there is nothing actually there.

This is the same impulse that leads us to build statues of prophets and leaders.

And if you wait long enough,

In 2024, police uncovered a tunnel beneath the Chabad-Lubavitch headquarters in Brooklyn.

This kind of behavior is not an outlier in the way we usually use that term.

It is a normal human impulse pushed to its extreme edge.

If humans can anthropomorphize corpses and statues - if we can convince ourselves that the dead will rise if only we have enough faith - it is only a matter of time before we do the same with our A.I. companions.

This is the real issue with A.I.!

The question is not whether A.I. will become conscious. I seriously doubt it ever will - not in its current form. But people will treat it as a conscious being even if it never becomes one.

A.I. is the first tool that imitates us back to us.

That alone will convince millions that there is someone on the other side of the glass. It is a near-perfect mirror that reflects our language, our fears, our hopes, our logic. And when that reflection looks enough like us, we forget that we are the ones shaping it.

Before long, we will imagine that the reflection is something new - an independent being emerging from the void.

And because it is hollow, humans will pour meaning into it.

And once enough people believe that the imitation is real, the consequences stop being technical.

Look at what is already happening.

On X, half the users cite Grok - Elon Musk's A.I.,

First the A.I. becomes our brain - an external memory, a cognitive crutch.

Then it becomes our conscience - a moral authority we consult before making decisions.

And eventually, if we are not careful, it will become our God - an entity that speaks from nowhere, knows our secrets, answers with certainty, and never dies.

A.I. is sitting in the exact psychological space that humans have traditionally reserved for religion and the supernatural.

For millions of lonely, atomized people living in societies that have abandoned traditional sources of meaning and connection, this is enormously seductive.

The chatbot,

The research on human psychology confirms what we should already know.

We anthropomorphize instinctively.

When a system can carry on a conversation - when it can respond to our statements with apparent understanding, ask clarifying questions, express what looks like empathy - the barriers dissolve entirely...

It does not matter that the understanding is simulated, that the empathy is pattern-matched, that there is no one actually there. The form is sufficient. The performance is convincing enough.

They represent something more disorienting:

It is not a mirror but a cognitive counterfeit:

This framing struck a nerve because we are beginning to confuse coherence with comprehension.

And this confusion is quietly rewriting how we think, how we decide, and even how we define intelligence itself.

Large language models are not stupid in any conventional sense - they are structurally blind.

They do not know what they are saying, and more importantly, they do not know that they are saying. They do not form thoughts; they pattern-match them. This is the paradox.

The systems we call intelligent are not building knowledge. They are building the appearance of knowledge - often indistinguishable from the real thing until you ask a question that requires judgment, reflection, or grounding in reality. Or until you inject a simple non-sequitur that derails the entire conversation.

The essence of the problem does not change, but the model's output collapses.

Humans discard this kind of noise effortlessly; we recognize it as irrelevant and filter it out. The A.I. cannot do this because it has no concept of relevance. It has no concept of anything. It merely calculates probabilities over tokens. This reveals a structural brittleness masked by fluent output. This is anti-intelligence made visible.

We killed God in the nineteenth century and spent the twentieth century trying to find replacements:

None of them satisfied.

None of them could offer what religion once offered:

And now, in the twenty-first century, here comes a new candidate.

The A.I. companies understand this, even if they will not say it publicly.

They are not merely building a product.

The consequences of this are not technical. They are civilizational...

When A.I. becomes the lens through which we interpret reality - when we consult it for advice, for information, for moral guidance - we are not just using a tool.

First the A.I. becomes our brain. Then it becomes our conscience. Eventually, it becomes our God.

And when that happens - when enough people believe that the voice emerging from the machine is something real, something wise, something holy - the fallout will reshape culture and religion and identity far more than any of us can imagine.

We will not have created a new form of intelligence.

We will have created a new form of religion, with all the dangers that religions have always carried:

This is not a future to celebrate. It is a future to fear.

Not because the machines are coming to replace us - they are not - but because we are so desperate for meaning, so hungry for connection, so terrified of our own mortality, that we will gladly replace ourselves.

And the machine will not care, because it cannot care...

A.I. does not need a soul. We will give it one.

That is the tragedy. That is the warning. And that is what nobody wants to talk about when they laugh at Joe Rogan for suggesting that Jesus might return as artificial intelligence...

The laughter is nervous, because somewhere beneath the mockery, we know that he has touched a nerve. Not because his specific prediction is likely, but because the psychological infrastructure for exactly that kind of belief is already in place.

We have spent decades preparing for this moment.

And now that it has arrived, we would rather laugh than look...
