
By Evan Gorelick, July 21, 2025. From The New York Times website, recovered through an archive website.

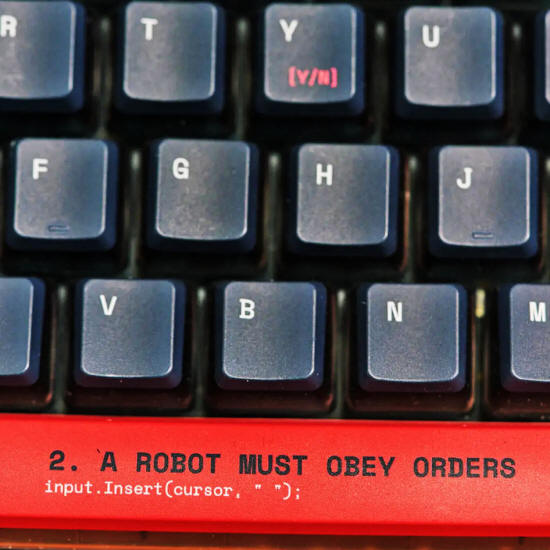

… bearing one of Isaac Asimov's laws of robotics ("2. A Robot Must Obey Orders"). … for The New York Times

Artificial intelligence isn't just for drafting essays and searching the web. It's also a weapon.

And on the Internet, both the good guys and the bad guys are already using it.

Cybersecurity used to be slow and laborious.

But now, that cat-and-mouse game moves at the speed of A.I.

And the stakes couldn't be higher:

That's more than the annual economic output of China.

Malicious emails used to be riddled with typos and errors, so spam filters could spot and snag them.

That strategy doesn't work anymore.

Since ChatGPT launched in November 2022, phishing attacks have increased more than fortyfold.

Because commercial chatbots have guardrails to prevent misuse, unscrupulous developers built spinoffs for cybercrime.

But even the mainstream models (ChatGPT, Claude, Gemini) are easy to outsmart, said Dennis Xu, a cybersecurity analyst at Gartner, a research and business advisory firm.

Google, which makes Gemini, said criminals (often from Iran, China, Russia and North Korea) used its chatbots to scope out victims, create malware and execute attacks.

OpenAI, which makes ChatGPT, said criminals used its chatbots to generate fake personas, spread propaganda and write scams.

Here's something odd:

Sandra Joyce, who leads the Google Threat Intelligence Group, told me she hadn't seen any "game-changing incident where A.I. did something humans couldn't do."

But cybercrime is a numbers game, and A.I. makes scaling easy.

Ameca, a humanoid robot that uses ChatGPT and was designed for realistic interactions. Credit: Loren Elliott for The New York Times

Walk into any big cybersecurity conference, and virtually every vendor is pitching a new A.I. product. Algorithms analyze millions of network events per second; they catch bogus users and security breaches that take people weeks to spot.

Algorithms have been around for decades, but humans still manually check compliance, search for vulnerabilities and patch code.

Now, cyber firms are automating all of it.

Microsoft said that its Security Copilot bot made engineers 30 percent faster and considerably more accurate.

A well-meaning bot may try to block traffic from a specific threat and instead block an entire country...
