
to maintain superiority, but [...] we are not the pinnacle of creation," Harvard Professor says.

That's why a research team from Princeton University and the Indian Institute of Technology decided to hand the job over to artificial intelligence.

Even stranger was how efficient the chips were, and how little their human progenitors understood them.

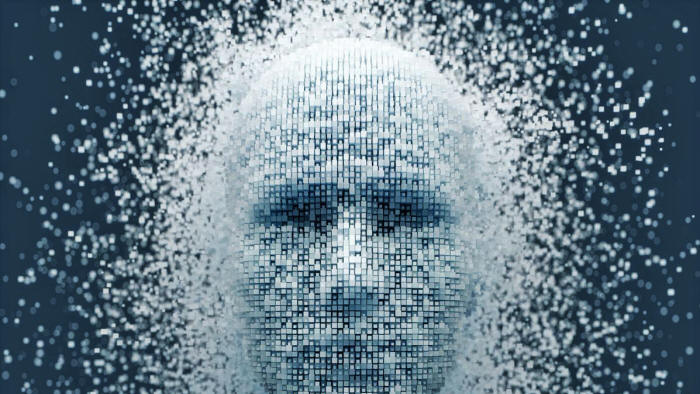

In a blog post titled "Viewing AI as Alien Intelligence," Loeb ponders how advanced the artificial intelligence of hypothetical alien civilizations might have grown, especially civilizations that might already have been around for billions of years before anything vaguely humanoid appeared in the cosmos.

Humans only think themselves superior because we haven't yet made contact with any type of extraterrestrial intelligence, Loeb says, and he thinks that such contact could make us question our own consciousness.

"If some creature somewhere else has developed artificial intelligence to improve itself, you'll have a machine not only smarter than all humans, but all aliens too."

Loeb thinks that A.I. will evolve so fast, especially once machines invent smarter machines that in turn invent smarter machines still, that today's A.I.-produced computer chips will eventually look almost primitive.

He is not the only one who thinks A.I. will "Darwinize" itself.

Seth Shostak, Ph.D., senior astronomer at the SETI Institute, holds similar views.

Shostak thinks we are essentially inventing the machines that will be our successors, and this has probably happened many times over with technology engineered by alien civilizations far older than humanity.

When you have machines inventing machines inventing machines, evolution happens at warp speed compared to biological evolution.

We are still at about the same level of intelligence as our hominid ancestors who hunted mammoths a million years ago. The only things we have to show for it are technological advances born from a brain made of neurons, blood, and spongy gray matter.

The silicon in artificial brains could easily outperform our own neural impulses, and machines built from it might eclipse more than just our species.

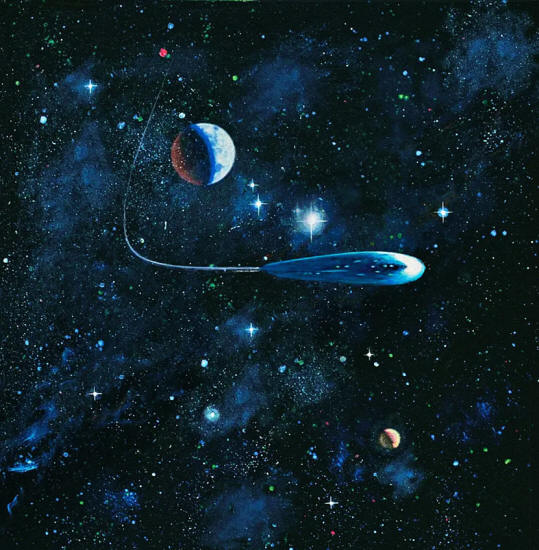

Both Shostak and Loeb agree that our first confirmed alien encounter is far more likely to be with alien A.I. than with the aliens that invented it, and that, likewise, humans will (and should) use A.I. to travel to other planets by proxy.

Machine intelligence does not suffer health issues from microgravity, need nutrients, or grow lonely.

Autonomous spacecraft that think with A.I. and know exactly where to go, what to do, and what to beam back to Houston are purpose-built for this.

Our rockets are actually not that impressive, even if they can zoom along at 7 to 8 miles per second, according to Shostak. Mars is a seven- to eight-month flight away, and it would be impossible to expect humans to trek much farther than that.

Going through that odyssey could already ravage the human body in ways we might not even be aware of yet. But biological pitfalls aside, we don't live nearly long enough to strap in for the 83,500 years it would take to reach Proxima Centauri, the closest star to our Sun.

If we can figure out how to prevent A.I. probes from breaking down and keep them generating their own power, their lifetimes could be practically unlimited.

We won't even need to put bootprints on other planets to encounter alien life.

Shostak thinks that we, and any other (again, hypothetical) intelligent life forms out there, can avoid being bombarded by cosmic rays aboard a spaceship by letting A.I. make the journey instead.

If we send an A.I. probe of our own to a planet an unfathomable number of light-years away, it is possible that the beings it communicates with will have thought "they were the only intelligent life out there" up until that point.

They might experience the same shock Loeb thinks we will.
