by Colin Allen

A robot walks into a bar and says,

A bad joke, indeed.

But even less funny if the robot says,

The fictional theme of robots turning against humans is older than the word itself, which first appeared in the title of Karel Čapek's 1920 play about artificial factory workers rising against their human overlords.

Just 22 years later, Isaac Asimov invented the "Three Laws of Robotics" to serve as a hierarchical ethical code for the robots in his stories:

From the first story in which the laws appeared, Asimov explored their inherent contradictions.

Great fiction, but unworkable theory...

Some technologists enthusiastically extrapolate from the observation that computing power doubles every 18 months to predict an imminent "technological singularity" in which a threshold for machines of superhuman intelligence will be suddenly surpassed.

Many Singularitarians assume a lot, not the least of which is that intelligence is fundamentally a computational process.

The techno-optimists among them also believe that such machines will be essentially friendly to human beings. I am skeptical about the Singularity, and even if "artificial intelligence" is not an oxymoron, "friendly A.I." will require considerable scientific progress on a number of fronts.

These new approaches analyze and exploit the complex causal structure of physically embodied and environmentally embedded systems, at every level, from molecular to social.

They demonstrate the inadequacy of high

But despite undermining the idea that the mind is fundamentally a digital computer, these approaches have improved our ability to use computers for more and more robust simulations of intelligent agents - simulations that will increasingly control machines occupying our cognitive niche.

If you don't believe me, ask Siri...

Machines, they insist, do only what they are told to do.

A bar-robbing robot would have to be instructed or constructed to do exactly that.

People are morally good only insofar as they must overcome the urge to do what is bad. We can be moral, they say, because we are free to choose our own paths.

Fully human-level moral agency, and all the responsibilities that come with it, requires developments in artificial intelligence or artificial life that remain, for now, in the domain of science fiction.

And yet…

Machines are increasingly operating with minimal human oversight in the same physical spaces as we do. Entrepreneurs are actively developing robots for home care of the elderly.

Robotic vacuum cleaners and lawn mowers are already mass market items. Self-driving cars are not far behind.

Mercedes is equipping its 2013 model S-Class cars with a system that can drive autonomously through city traffic at speeds up to 25 m.p.h.

Google's fleet of autonomous cars has logged about 200,000 miles without incident in California and Nevada, in conditions ranging from surface streets to freeways.

By Google's estimate, the cars have required intervention by a human co-pilot only about once every 1,000 miles and the goal is to reduce this rate to once in 1,000,000 miles.

How long until the next bank robber will have an autonomous getaway vehicle?

This is autonomy in the engineer's sense, not the philosopher's.

We don't want our cars leaving us to join the Peace Corps, nor will they any time soon.

But as the layers of software pile up between us and our machines, they are becoming increasingly independent of our direct control.

In military circles, the phrase "man on the loop" has come to replace "man in the loop," indicating the diminishing role of human overseers in controlling drones and ground-based robots that operate hundreds or thousands of miles from base.

These machines need to adjust to local conditions faster than can be signaled and processed by human tele-operators.

And while no one is yet recommending that decisions to use lethal force should be handed over to software, the Department of Defense is sufficiently committed to the use of autonomous systems that it has sponsored engineers and philosophers to outline prospects (Autonomous Military Robotics - Risk, Ethics, and Design) for ethical governance of battlefield machines.

Joke or not, the topic of machine morality is here to stay.

Even modest amounts of engineered autonomy make it necessary to outline some modest goals for the design of artificial moral agents.

Modest because we are not talking about guidance systems for the Terminator or other technology that does not yet exist.

Necessary, because as machines with limited autonomy operate more often than before in open environments, it becomes increasingly important to design a kind of functional morality that is sensitive to ethically relevant features of those situations.

Modest, again, because this functional morality is not about self-reflective moral agency - what one might call "full" moral agency - but simply about trying to make autonomous agents better at adjusting their actions to human norms.

This can be done with technology that is already available or can be anticipated within the next 5 to 10 years.

The project of designing artificial moral agents provokes a wide variety of negative reactions, including that it is preposterous, horrendous, or trivial.

My co-author Wendell Wallach and I have been accused of being, in our book "Moral Machines," unimaginatively human-centered in our views about morality, of being excessively optimistic about technological solutions, and of putting too much emphasis on engineering the machines themselves rather than looking at the whole context in which machines operate.

In response to the charge of preposterousness, I am willing to double down.

Far from being an exercise in science fiction, serious engagement with the project of designing artificial moral agents has the potential to revolutionize moral philosophy in the same way that philosophers' engagement with science continuously revolutionizes human self-understanding.

New insights can be gained from confronting the question of whether and how a control architecture for robots might utilize (or ignore) general principles recommended by major ethical theories.

Perhaps ethical theory is to moral agents as physics is to outfielders - theoretical knowledge that isn't necessary to play a good game. Such theoretical knowledge may still be useful after the fact to analyze and adjust future performance.

Even if success in building artificial moral agents will be hard to gauge, the effort may help to forestall the spread of inflexible, ethically blind technologies.

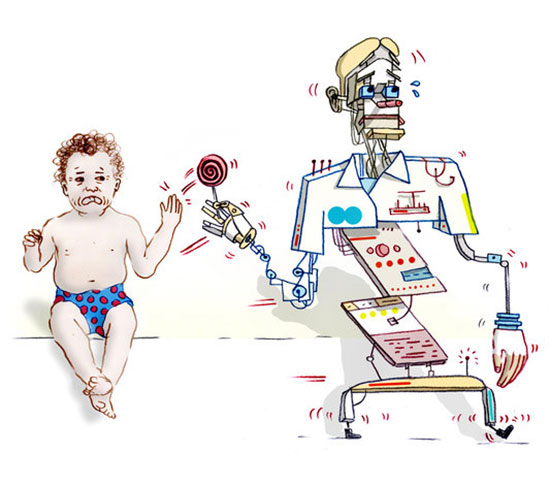

More concretely, if cars are smart enough to navigate through city traffic, they are certainly smart enough to detect how long they have been parked outside a bar (easily accessible through the marriage of G.P.S. and the Internet) and to ask you, the driver, to prove you're not drunk before starting the engine so you can get home.

For the near term (say, 5 to 10 years), a responsible human will still be needed to supervise these "intelligent" cars, so you had better be sober.

Does this really require artificial morality, when one could simply put a breathalyzer between key and ignition?

Such a dumb, inflexible system would have a kind of operational morality in which the engineer has decided that no car should be started by a person with a certain blood alcohol level.

But it would be ethically blind - incapable, for instance, of recognizing the difference between, on the one hand, a driver who needs the car simply to get home and, on the other hand, a driver who had a couple of drinks with dinner but needs the car because a 4-year-old requiring urgent medical attention is in the back seat.

It is within our current capacities to build machines that are able to determine, based on real-time information about current traffic conditions and access to actuarial tables, how likely it is that this situation might lead to an accident.

Of course, this only defers the ethical question of how to weigh the potential for harm that either option presents, but a well-designed system of human-machine interaction could allow for a manual override to be temporarily logged in a "black-box" similar to those used on airplanes.

In case of an accident this would provide evidence that the person had taken responsibility.
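To make the contrast concrete, here is a minimal sketch in Python of the two kinds of ignition control described above. It is an illustration under stated assumptions, not a design anyone has built: the names (`TripContext`, `BlackBox`, `operational_interlock`, `functional_interlock`), the thresholds, and the toy risk formula are all hypothetical, and the ethical question of how to weigh the competing harms is left, as in the text, to the human who chooses to override.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Every constant here is an assumption made for illustration only.
BAC_LIMIT = 0.08          # assumed blood alcohol limit fixed by the engineer
MAX_OVERRIDE_RISK = 0.05  # assumed ceiling on trip risk above which no override is offered


@dataclass
class TripContext:
    blood_alcohol: float     # measured blood alcohol concentration of the driver
    baseline_risk: float     # assumed actuarial accident probability for this trip when sober
    emergency_claimed: bool  # driver states an urgent need, such as a medical emergency


@dataclass
class BlackBox:
    """Append-only record of overrides, loosely modeled on a flight recorder."""
    entries: list = field(default_factory=list)

    def record(self, event: str, ctx: TripContext, risk: float) -> None:
        timestamp = datetime.now(timezone.utc).isoformat()
        self.entries.append((timestamp, event, ctx, risk))


def operational_interlock(ctx: TripContext) -> bool:
    """Ethically blind rule: start only if the driver is under the limit."""
    return ctx.blood_alcohol < BAC_LIMIT


def estimated_risk(ctx: TripContext) -> float:
    """Toy model (not empirical): risk scales linearly with blood alcohol."""
    return ctx.baseline_risk * (1 + 10 * ctx.blood_alcohol / BAC_LIMIT)


def functional_interlock(ctx: TripContext, box: BlackBox,
                         driver_accepts_responsibility: bool) -> bool:
    """Context-sensitive rule: allow a logged manual override in an emergency."""
    if ctx.blood_alcohol < BAC_LIMIT:
        return True
    risk = estimated_risk(ctx)
    if (ctx.emergency_claimed and driver_accepts_responsibility
            and risk <= MAX_OVERRIDE_RISK):
        # The log is the evidence that a person took responsibility.
        box.record("manual_override", ctx, risk)
        return True
    return False


if __name__ == "__main__":
    box = BlackBox()
    parent = TripContext(blood_alcohol=0.09, baseline_risk=0.001,
                         emergency_claimed=True)
    print("breathalyzer only:", operational_interlock(parent))
    print("with override:", functional_interlock(parent, box, True))
    print("overrides logged:", len(box.entries))
```

The first function treats every driver over the limit identically. The second still refuses by default, but it computes an estimate of the risk, offers an override only when an emergency is claimed, and writes that override to the log.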

Just as we can envisage machines with increasing degrees of autonomy from human oversight, we can envisage machines whose controls involve increasing degrees of sensitivity to things that matter ethically.

Not perfect machines, to be sure, but better.

...

I am sensitive to the worries, but optimistic enough to think that this kind of techno-pessimism has, over the centuries, been oversold.

Luddites have always come to seem quaint, except when they were dangerous.

The challenge for philosophers and engineers alike is to figure out what should and can reasonably be done in the middle space that contains somewhat autonomous, partly ethically-sensitive machines.

Some may think the exploration of this space is too dangerous to allow.

Prohibitionists may succeed in some areas - robot arms control, anyone...? - but they will not, I believe, be able to contain the spread of increasingly autonomous robots into homes, eldercare, and public spaces, not to mention the virtual spaces in which much software already operates without a human in the loop.

We want machines that do chores and errands without our having to monitor them continuously.

Retailers and banks depend on software controlling all manner of operations, from credit card purchases to inventory control, freeing humans to do other things that we don't yet know how to construct machines to do.

My response is that,

The challenge is to reconcile these two rather different ways of approaching the world, to yield better understanding of how interactions among people and contexts enable us, sometimes, to steer a reasonable course through the competing demands of our moral niche.

The different kinds of rigor provided by philosophers and engineers are both needed to inform the construction of machines that,
