
by Brandon Keim

April 12, 2012

from Wired Website

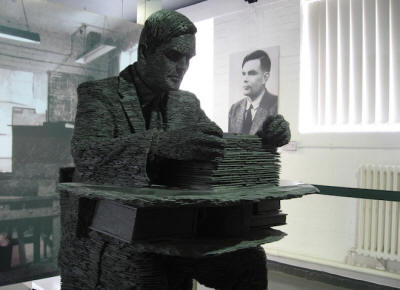

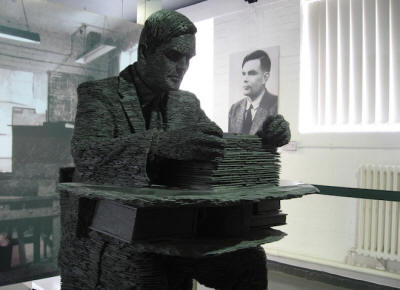

The Alan Turing memorial at Bletchley Park, the site of Turing’s codebreaking accomplishments during World War II. (Jon Callas/Flickr)

One hundred years after Alan Turing was born, his eponymous test remains an elusive benchmark for artificial intelligence (AI). Now, for the first time in decades, it’s possible to imagine a machine making the grade.

Turing was one of the 20th century’s great mathematicians, a conceptual architect of modern computing whose code-breaking played a decisive part in World War II. His test, described in a seminal dawn-of-the-computer-age paper, was deceptively simple: If a machine could pass for human in conversation, the machine could be considered intelligent.

Artificial intelligences are now ubiquitous, from GPS navigation systems and Google algorithms to automated customer service and Apple’s Siri, to say nothing of Deep Blue and Watson - but no machine has met Turing’s standard.

The quest to do so, however, and the lines of research inspired by the general challenge of modeling human thought, have profoundly influenced both computer and cognitive science.

There is reason to believe that code kernels for the first Turing-intelligent machine have already been written.

“Two revolutionary advances in information technology may bring the Turing test out of retirement,” wrote Robert French, a cognitive scientist at the French National Center for Scientific Research, in an Apr. 12 Science essay.

“The first is the ready availability of vast amounts of raw data - from video feeds to complete sound environments, and from casual conversations to technical documents on every conceivable subject. The second is the advent of sophisticated techniques for collecting, organizing, and processing this rich collection of data.”

“Is it possible to recreate something similar to the subcognitive low-level association network that we have? That’s experiencing largely what we’re experiencing? Would that be so impossible?” French said.

When Turing first proposed the test - poignantly modeled on a party game in which participants tried to fool judges about their gender; Turing was cruelly persecuted for his homosexuality - the idea of “a subcognitive low-level association network” didn’t exist.

The idea of replicating human thought, however, seemed quite possible, even relatively easy.

The human mind was thought to be logical. Computers run logical commands. Therefore our brains should be computable. Computer scientists thought that within a decade, maybe two, a person engaged in dialogue with two hidden conversants, one computer and one human, would be unable to reliably tell them apart.

That simplistic idea proved ill-founded.

Cognition is far more complicated than mid-20th century computer scientists or psychologists had imagined, and logic was woefully insufficient in describing our thoughts.

Appearing human turned out to be an insurmountably difficult task, drawing on previously unappreciated human abilities to integrate disparate pieces of information in a fast-changing environment.

“Symbolic logic by itself is too brittle to account for uncertainty,” said Noah Goodman, a computer scientist at Stanford University who models intelligence in machines.

Nevertheless, “the failure of what we now call old-fashioned AI was very instructive. It led to changes in how we think about the human mind. Many of the most important things that have happened in cognitive science” emerged from these struggles, he said.

By the mid-1980s, the Turing test had been largely abandoned as a research goal (though it survives today in the annual Loebner prize for realistic chatbots, and momentarily realistic advertising bots are a regular feature of online life).

However, it helped spawn the two dominant themes of modern cognition and artificial intelligence: calculating probabilities and producing complex behavior from the interaction of many small, simple processes.

Unlike the so-called brute force computational approaches seen in programs like Deep Blue, the computer that famously defeated chess champion Garry Kasparov, these are considered accurate reflections of at least some of what occurs in human thought.

As of now, so-called probabilistic and connectionist approaches inform many real-world artificial intelligences: autonomous cars, Google searches, automated language translation, and the IBM-developed Watson program that so thoroughly dominated at Jeopardy!

They remain limited in scope - “If you say, ‘Watson, make me dinner,’ or ‘Watson, write a sonnet,’ it explodes,” said Goodman - but raise the alluring possibility of applying them to unprecedentedly large, detailed datasets.

“Suppose, for a moment, that all the words you have ever spoken, heard, written, or read, as well as all the visual scenes and all the sounds you have ever experienced, were recorded and accessible, along with similar data for hundreds of thousands, even millions, of other people. Ultimately, tactile and olfactory sensors could also be added to complete this record of sensory experience over time,” wrote French in Science, with a nod to MIT researcher Deb Roy’s recordings of 200,000 hours of his infant son’s waking development.

He continued, “Assume also that the software exists to catalog, analyze, correlate, and cross-link everything in this sea of data. These data and the capacity to analyze them appropriately could allow a machine to answer heretofore computer-unanswerable questions” and even pass a Turing test.

Artificial intelligence expert Satinder Singh of the University of Michigan was cautiously optimistic about the prospects offered by data.

“Are large volumes of data going to be the source of building a flexibly competent intelligence? Maybe they will be,” he said.

“But all kinds of questions that haven’t been studied much become important at this point. What is useful to remember? What is useful to predict? If you put a kid in a room, and let him wander without any task, why does he do what he does?” Singh continued. “All these sorts of questions become really interesting.

“In order to be broadly and flexibly competent, one needs to have motivations and curiosities and drives, and figure out what is important,” he said.

“These are huge challenges.”

IBM’s Watson computer defeating Ken Jennings, the highest-ranking human Jeopardy! player. (IBM)

Video: Two chatbots hold a conversation in the Cornell Creative Machines Lab. (Cornell University/YouTube)

Should a machine pass the Turing test, it would fulfill a human desire that predates the computer age, dating back to Mary Shelley’s Frankenstein or even the golems of medieval folklore, said computer scientist Carlos Gershenson of the National Autonomous University of Mexico.

But it won’t answer a more fundamental question.

“It will be difficult to do - but what is the purpose?” he said.

Citation: “Dusting Off the Turing Test.” By Robert M. French. Science, Vol. 336 No. 6088, April 13, 2012.

“Beyond Turing’s Machines.” By Andrew Hodges. Science, Vol. 336 No. 6088, April 13, 2012.
