by Vernor Vinge

2007, from the KurzweilAI website

It's 2045 and nerds in old-folks homes are wandering around, scratching their heads, and asking plaintively, "But ... but, where's the Singularity?"

Science fiction writer Vernor Vinge - who originated the concept of the technological Singularity - doesn't think that will happen, but he explores three alternate scenarios, along with our "best hope for long-term survival" - self-sufficient, off-Earth settlements.

Originally presented at Long Now Foundation Seminars About Long Term Thinking, February 15, 2007.

Published with permission on KurzweilAI.net March 14, 2007.


Just for the record

Given the title of my talk, I should define and briefly discuss what I mean by the Technological Singularity:

It seems plausible that with technology we can, in the fairly near future, create (or become) creatures who surpass humans in every intellectual and creative dimension. Events beyond this event - call it the Technological Singularity - are as unimaginable to us as opera is to a flatworm.

The preceding sentence, almost by definition, makes long-term thinking an impractical thing in a Singularity future.

However, maybe the Singularity won't happen, in which case planning beyond the next fifty years could have great practical importance. In any case, a good science-fiction writer (or a good scenario planner) should always be considering alternative outcomes.

I should add that the alternatives I discuss tonight also assume that faster-than-light space travel is never invented!

Important note for those surfing this talk out of context: I still regard the Singularity as the most likely non-catastrophic outcome for our near future.

There are many plausible catastrophic scenarios (see Martin Rees's Our Final Hour), but tonight I'll try to look at non-singular futures that might still be survivable.

The Age of Failed Dreams

A plausible explanation for "Singularity failure" is that we never figure out how to "do the software" (or "find the soul in the hardware", if you're more mystically inclined).

Here are some possible symptoms:

- Software creation continues as the province of software engineering.

- Software projects that endeavor to exploit increasing hardware power fail in more and more spectacular ways.

- Project failures so deep that no amount of money can disguise the failure; walking away from the project is the only option.

- Spectacular failures in large, total automation projects. (Human flight controllers occasionally run aircraft into each other; a bug in a fully automatic system could bring a dozen aircraft to the same point in space and time.)

- Such failures lead to reduced demand for more advanced hardware, which no one can properly exploit - causing manufacturers to back off in their improvement schedules. In effect, Moore's Law fails - even though physical barriers to further improvement may not be evident.

- Eventually, basic research in related materials science issues stagnates, in part for lack of new generations of computing systems to support that research.

- Hardware improvements in simple and highly regular structures (such as data storage) are the last to fall victim to stagnation. In the long term, we have some extraordinarily good audio-visual entertainment products (but nothing transcendental) and some very large data bases (but without software to properly exploit them).

- So most people are not surprised when the promise of strong AI is not fulfilled, and other advances that would depend on something like AI for their greatest success - things like nanotech general assemblers - also elude development.

Altogether, the early years of this time come to be called the "Age of Failed Dreams."

Broader characteristics of the early years

- It's 2040 and nerds in old-folks homes are wandering around, scratching their heads, and asking plaintively, "But ... but, where's the Singularity?"

- Some consequences might seem comforting:

  - Edelson's Law says: "The number of important insights that are not being made is increasing exponentially with time." I see this as caused by the breakneck acceleration of technological progress - and the failure of merely human minds to keep up. If progress slowed, there might be time for us to begin to catch up (though I suspect that our bioscience databases would continue to be filled faster than we could ever analyze).

  - Maybe now there would finally be time to go back over the last century of really crummy software and redo things, but this time in a clean and rational way. (Yeah, right.)

- On the other hand, humanity's chances for surviving the century might become more dubious:

  - Environmental and resource threats would still exist.

  - Warfare threats would still exist. In the early years of the 21st century, we have become distracted and (properly!) terrified by nuclear terrorism. We tend to ignore the narrow passage of 1970-1990, when tens of thousands of nukes might have been used in a span of days, perhaps without any conscious political trigger. A return to MAD is very plausible, and when stoked by environmental stress, it's a very plausible civilization killer.

Envisioning the resulting Long Now possibilities

Suppose humankind survives the 21st century. Coming out of the Age of Failed Dreams, what would be the prospects for a long human era?

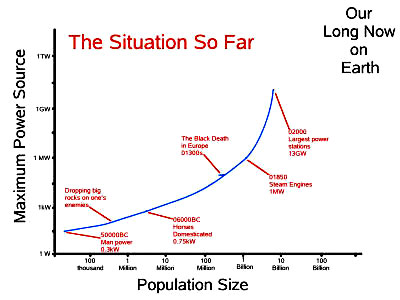

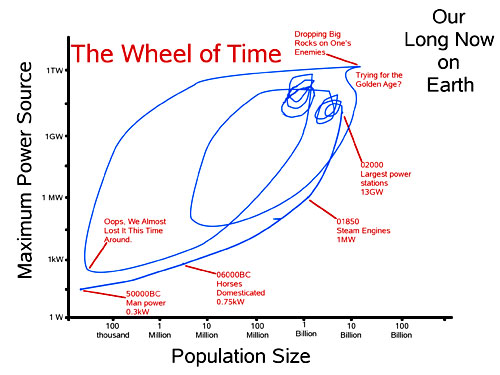

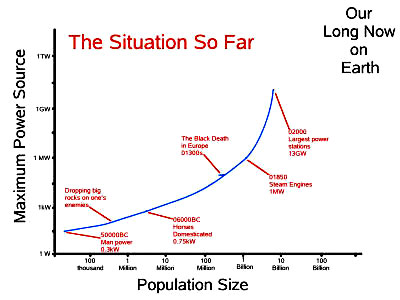

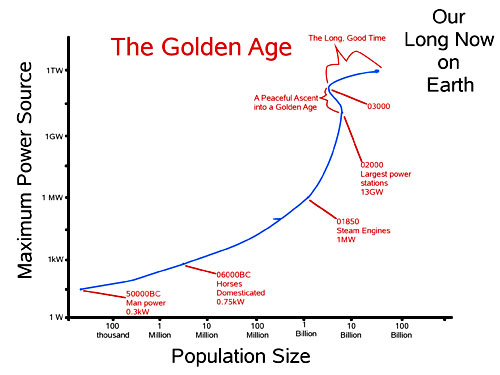

I'd like to illustrate some possibilities with diagrams that show all of the Long Now - from tens of thousands of years before our time to tens of thousands of years after - all at once and without explicit reference to the passage of time (which seems appropriate for thinking of the Human Era as a single long now!).

Instead of graphing a variable such as population as a function of time, I'll graph the relationship of an aspect of technology against population size.

By way of example, see the accompanying image for our situation so far.

It doesn't look very exciting. In fact, the most impressive thing is that in the big picture, we humans seem a steady sort. Even the Black Death makes barely a nick in our tech/pop progress. Maybe this reflects how things really are - or maybe we haven't seen the whole story. (Note that extreme excursions to the right (population) or upwards (related to destructive potential) would probably be disastrous for civilization on Earth.)

Without the Singularity, here are three possibilities (scenarios in their own right):

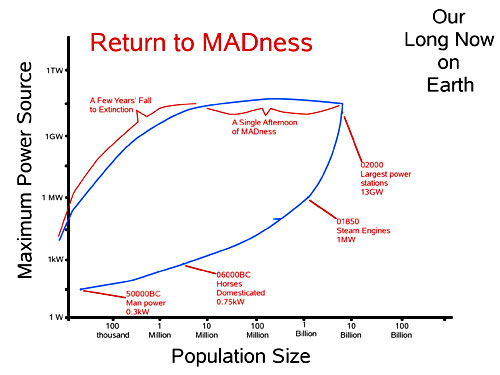

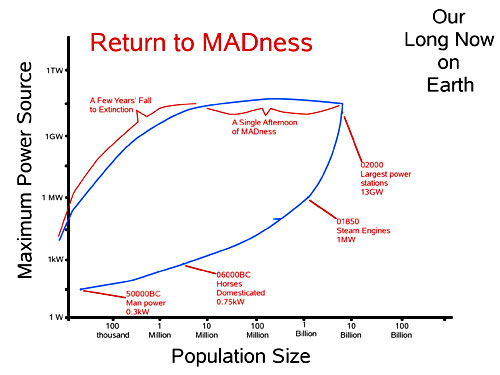

Scenario 1 - A Return to MADness

- I said I'd try to avoid existential catastrophes, but I want to emphasize that they're still out there. Avoiding such should be at the top of ongoing thinking about the long term.

- The "bad afternoon" going back across the top of the diagram should be very familiar to those who lived through the era of:

  - Fate of the Earth by Jonathan Schell

  - The TTAPS Nuclear Winter claims (the report was dubbed the "TTAPS" study from the initials of the last names of its authors: R.P. Turco, O.B. Toon, T.P. Ackerman, J.B. Pollack, and C. Sagan)

  - Like many people, I'm skeptical about the two preceding references. On the other hand, there's much uncertainty about the effects of a maximum nuclear exchange. The subtle logic of MAD planning constantly raises the threshold of "acceptable damage", and engages very smart people and enormous resources in assuring that ever greater levels of destruction can be attained. I can't think of any other threat where our genius is so explicitly aimed at our own destruction.

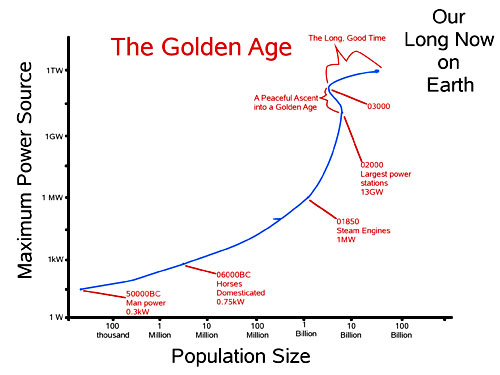

Scenario 2 - The Golden Age

(A scenario to balance the pessimism of A Return to MADness, Scenario 1 above.)

- There are trends in our era that tend to support this optimistic scenario:

  - The plasticity of the human psyche (on time scales at least as short as one human generation). When people have hope, information, and communication, it's amazing how fast they start behaving with wisdom exceeding the elites.

  - The Internet empowers such trends, even if we don't accelerate on into the Singularity. (My most recent book, Rainbows End, might be considered an illustration of this, depending on how one interprets the evidence of incipiently transhuman players.)

- This scenario is similar to Gunther Stent's vision in The Coming of the Golden Age, a View of the End of Progress (except that in my version there would still be thousands of years to clean up after Edelson's Law).

- The decline in population (the leftward wiggle in the trajectory) is a peaceful, benign thing, ultimately resulting in a universal high standard of living.

- On the longest time horizon, there is some increase in both power and population.

- This civilization apparently reaches the long-term conclusion that a large and happy population is better than a smaller happy population. The reverse could be argued. Perhaps in the fullness of time, both possibilities were tried.

- So what happens at the far end of this Long Now (20000 years from now, 50000)? Even without the Singularity, it seems reasonable that at some point the species would become something greater.

- A policy suggestion (applicable to most of these scenarios): [Young] Old People are good for the future of Humanity! Thus prolongevity research may be one of the most important undertakings for the long-term safety of the human race.

- This suggestion explicitly rejects the notion that lots of old people would deaden society. I'm not talking about the moribund old people that we humans have always known (and been). We have no idea what young very old people are like, but their existence might give us something like the advantage the earliest humans got from the existence of very old tribe members (age 35 to 65).

- The Long Now perspective comes very naturally to someone who expects that not only will his/her g*grandchildren be around in 500 years, but so may the individual him/herself.

- And once we get well into the future, then besides having a long prospective view, there would be people who have experienced the distant past.

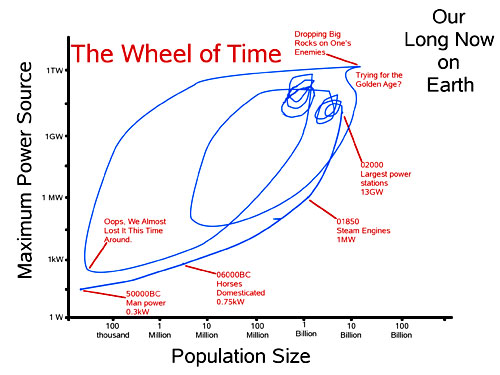

Scenario 3 - The Wheel of Time

I fear this scenario is much more plausible than The Golden Age (Scenario 2 above).

The Wheel of Time is based on the fact that Earth and Nature are dynamic and our own technology can cause terrible destruction. Sooner or later, even with the best planning, mega-disasters happen, and civilization falls (or staggers).

Hence, in this diagram we see cycles of disasters and recovery.

There has been a range of speculation about such questions (mostly about the first recovery):

In fact, we know almost nothing about such cycles - except that the worst could probably kill everyone on Earth.

How to deal with the deadliest uncertainties

A frequent catchphrase in this talk has been "Who knows?". Often this mantra is applied to the most serious issues we face:

- How dangerous is MAD, really? (After all, "it got us through the 20th century alive".)

- How much of an existential threat is environmental change?

- How fast could humanity recover from major catastrophes?

- Is full recovery even possible?

- Which disasters are the most difficult to recover from?

- How close is technology to running beyond nation-state MAD and giving irritable individuals the power to kill us all?

- What would be the long-term effect of having lots of young old people?

- What is the impact of [your-favorite-scheme-or-peril] on long-term human survival?

We do our best with scenario planning. But there is another tool, and it is wonderful if you have it: broad experience.

- An individual doesn't have to try out every recreational drug to know what's deadly.

- An individual has in him/herself no good way of estimating the risks of different styles of diet and exercise. Even the individual's parents may not be much help - but a Framingham study can provide guidance.

Alas, our range of experience is perilously narrow, since we have essentially one experiment to observe.

In the Long Now, can we do better? The Golden Age scenario would allow serial experimentation with some of the less deadly imponderables: over a long period of time, there could be gentle experiments with population size and prolongevity. (In fact, some of that may be visible in the "wiggle" in my Golden Age diagram.)

But there's no way we can guarantee we're in The Golden Age scenario, or have any confidence that our experiments won't destroy civilization. (Personally, I find The Wheel of Time scenarios much more plausible than The Golden Age.)

Of course, there is a way to gain experience and at the same time improve the chances for humanity's survival:

Self-sufficient, off-Earth settlements as humanity's best hope for long-term survival

This message has been brought back to the attention of futurists by some very impressive people: Hawking, Dyson, and Rees in particular.

Some or all of these folks have been making this point for many decades. And of course, such settlements were at the heart of much of 20th century science-fiction. It is heartwarming to see the possibility that, in this century, the idea could move back to center stage.

(Important note for those surfing this talk out of context: I'm not suggesting space settlement as an alternative to, or evasion of, the Singularity. Space settlement would probably be important in Singularity scenarios, too, but embedded in inconceivabilities.)

Some objections and responses:

- "Chasing after safety in space would just distract from the life-and-death priority of cleaning up the mess we have made of Earth." I suspect that this point of view is beyond logical debate.

- "Chasing after safety in space assumes the real estate there is not already in use." True. The possibility of the Singularity and the question "Are we alone in the universe?" are two of the most important practical mysteries that we face.

- "A real space program would be too dangerous in the short term." There may be some virtue in this objection. A real space program means cheap access to space, which is very close to having a WMD capability. In the long run, the human race should be much safer, but at the expense of this hopefully small short-term risk.

- "There's no other place in the Solar System to support a human civilization - and the stars are too far."

  - Asteroid belt civilizations might have more wealth potential than terrestrial ones.

  - In the Long Now, the stars are NOT too far, even at relatively low speeds. Furthermore, interstellar radio networks would be trivial to maintain (1980s level technology). Over time, there could be dozens, hundreds, thousands of distinct human histories exchanging their experience across the centuries. There really could be Framingham studies of the deadly uncertainties!
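To put rough numbers on "not too far, even at relatively low speeds", here is a minimal back-of-envelope sketch. The target star (Alpha Centauri, at roughly 4.37 light-years) and the cruise speeds are illustrative assumptions, not figures from the talk, and the calculation ignores acceleration, deceleration, and relativistic effects.

```python
# Back-of-envelope sketch. ASSUMPTIONS (not from the talk): the target is
# Alpha Centauri at roughly 4.37 light-years, and the cruise speeds below
# are arbitrary illustrative fractions of light speed.
ALPHA_CENTAURI_LY = 4.37


def travel_time_years(distance_ly: float, speed_fraction_of_c: float) -> float:
    """Years needed to cover distance_ly at a constant fraction of light speed.

    Ignores acceleration, deceleration, and relativistic time dilation.
    """
    if not 0 < speed_fraction_of_c <= 1:
        raise ValueError("speed must be a fraction of c in the range (0, 1]")
    return distance_ly / speed_fraction_of_c


def radio_round_trip_years(distance_ly: float) -> float:
    """Years for a radio question to go out and the answer to come back."""
    return 2 * distance_ly


if __name__ == "__main__":
    for speed in (0.001, 0.01, 0.1):
        years = travel_time_years(ALPHA_CENTAURI_LY, speed)
        print(f"cruising at {speed} c: about {years:,.0f} years one way")
    round_trip = radio_round_trip_years(ALPHA_CENTAURI_LY)
    print(f"radio round trip: about {round_trip:.1f} years")
```

On these assumptions, even a slow crossing fits inside a Long Now measured in tens of thousands of years, while a radio exchange takes under a decade.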

What's a real space program... and what's not

- From 1957 to circa 1980 we humans did some proper pioneering in space. We (I mean brilliant engineers and scientists and brave explorers) established a number of near-Earth applications that are so useful that they can be commercially successful even at launch costs to Low Earth Orbit (LEO) of $5000 to $10000/kg. We also undertook a number of human and robotic missions that resolved our greatest uncertainties about the Solar System and travel in space.

- From 1980 till now? Well, launch to LEO still runs $5000 to $10000/kg. As far as I can tell, the new Vision for Space Exploration will maintain these costs. This approach made some sense in 1970, when we were just beginning and when initial surveys of the problems and applications were worth almost any expense.

Now, in the early 21st century, these launch costs make talk of humans-in-space a doubly gold-plated sham:

- First, because of the pitiful limitations on delivered payloads, except at prices that are politically impossible (or are deniable promises about future plans).

- Second, because with these launch costs, the payloads must be enormously more reliable and compact than commercial off-the-shelf hardware - and therefore enormously expensive in their own right.

I believe most people have great sympathy and enthusiasm for humans-in-space. They really "get" the big picture. Unfortunately, their sympathy and enthusiasm have been abused.

Humankind's presence in space is essential to long-term human survival. That is why I urge that we reject any major humans-in-space initiative that does not have the prerequisite goal of much cheaper (at least by a factor of ten) access to space.
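For a sense of scale, the arithmetic behind that factor of ten can be sketched as follows. The $5000 to $10000/kg range and the factor of ten come from the talk; the 100-tonne payload is purely a hypothetical figure chosen for illustration.

```python
# Rough sketch of the launch-cost arithmetic.
# From the talk: $5000 to $10000 per kg to LEO, and a hoped-for reduction
# of at least a factor of ten.
# ASSUMPTION (not from the talk): the 100-tonne payload is hypothetical.
LEO_COST_PER_KG_USD = (5_000, 10_000)
TARGET_REDUCTION = 10
HYPOTHETICAL_PAYLOAD_KG = 100_000


def launch_cost_range(payload_kg, cost_per_kg=LEO_COST_PER_KG_USD, reduction=1.0):
    """Return (low, high) launch cost in USD for a payload mass in kg.

    `reduction` divides the per-kg price, so reduction=10 models launch
    that is ten times cheaper.
    """
    if payload_kg < 0 or reduction <= 0:
        raise ValueError("payload must be non-negative and reduction positive")
    low, high = cost_per_kg
    return (payload_kg * low / reduction, payload_kg * high / reduction)


if __name__ == "__main__":
    now = launch_cost_range(HYPOTHETICAL_PAYLOAD_KG)
    cheaper = launch_cost_range(HYPOTHETICAL_PAYLOAD_KG, reduction=TARGET_REDUCTION)
    print(f"at quoted prices: ${now[0]:,.0f} to ${now[1]:,.0f}")
    print(f"ten times cheaper: ${cheaper[0]:,.0f} to ${cheaper[1]:,.0f}")
```

The sketch covers launch cost only; it says nothing about the second half of the argument, that payloads built for expensive launches are themselves enormously expensive.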

Institutional paths to achieve cheaper access to space

- There are several space propulsion methods that look feasible - once the spacecraft is away from Earth. Such methods could reduce the inner solar system to something like the economic distances that 18th century Europeans experienced in exploring Earth.

- The real bottleneck is hoisting payloads from the surface of the Earth to orbit. There are a number of suggested approaches. Which, if any, of them will pay off? Who knows? On the other hand, this is an imponderable that can probably be resolved by:

  - Prizes like the X-prize.

  - Real economic prizes in the form of promises (from governments and/or the largest corporations) of the form: "Give us a price to orbit of $X/kg, and we'll give you Y tonnes of business per year for Z years."

  - Retargeting NASA to basic enabling research, more in the spirit of its predecessor, NACA.

  - A military arms race. (Alas, this may be the most likely eventuality, and it might be part of a return to MADness. Highly deprecated!)
