|

from

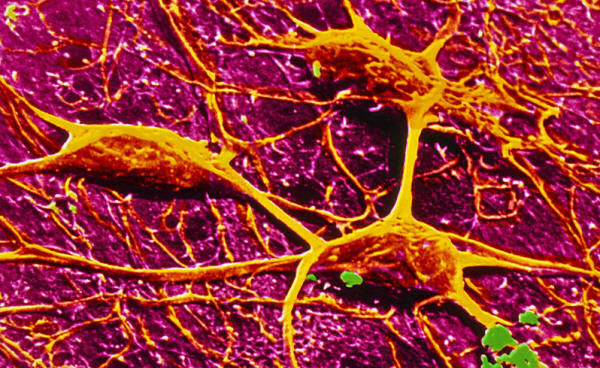

Nature Website and transmit information in the brain. Credit: CNRI/SPL

that mimics neural processing could make artificial intelligence more efficient - and more human.

That achievement (Ultralow Power Artificial Synapses using Nanotextured Magnetic Josephson Junctions), described in Science Advances on 26 January, is a key benchmark in the development of advanced computing devices designed to mimic biological systems.

And it could open the door to more natural machine-learning software, although many hurdles remain before it could be used commercially.

But because conventional computer hardware was not designed to run brain-like algorithms, these machine-learning tasks require orders of magnitude more computing power than the human brain does.

NIST is one of a handful of groups trying to develop 'neuromorphic' hardware that mimics the human brain in the hope that it will run brain-like software more efficiently.

In conventional electronic systems, transistors process information at regular intervals and in precise amounts - either 1 or 0 bits.

But neuromorphic devices can accumulate small amounts of information from multiple sources, alter it to produce a different type of signal and fire a burst of electricity only when needed - just as biological neurons do.

As a result, neuromorphic devices require less energy to run.
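The accumulate-then-fire behaviour described above can be illustrated with a toy "leaky integrate-and-fire" model. This is only a minimal software sketch, not the NIST hardware or any published algorithm: the function name `integrate_and_fire` and the `threshold` and `leak` values are assumptions chosen for illustration.

```python
def integrate_and_fire(inputs, threshold=1.0, leak=0.9):
    """Return the time steps at which a toy neuron fires.

    inputs    -- one list of incoming signal strengths per time step
    threshold -- hypothetical level the stored signal must reach to fire
    leak      -- hypothetical fraction of stored signal kept each step
    """
    potential = 0.0
    spikes = []
    for step, incoming in enumerate(inputs):
        # Accumulate small contributions from several sources,
        # while some of the previously stored signal leaks away.
        potential = potential * leak + sum(incoming)
        if potential >= threshold:
            # Fire a burst only when needed, then reset.
            spikes.append(step)
            potential = 0.0
    return spikes


signals = [[0.3, 0.2], [0.1], [0.4, 0.3], [0.0], [0.2]]
print(integrate_and_fire(signals))  # fires once, at step 2: [2]
```

In this sketch the unit does nothing on most steps and produces output only when the accumulated input crosses the threshold, in contrast to a clocked transistor that switches at every interval.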

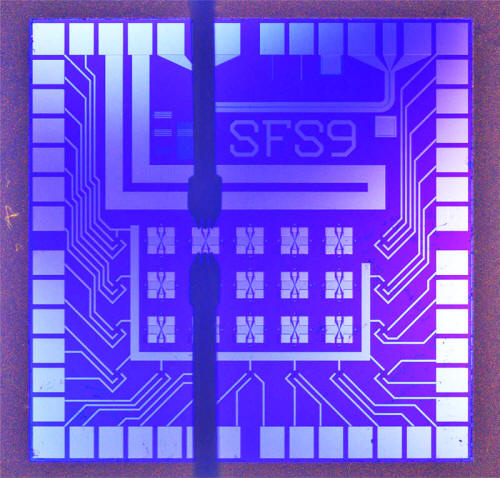

So Michael Schneider's team created neuron-like electrodes out of niobium superconductors, which conduct electricity without resistance. They filled the gaps between the superconductors with thousands of nanoclusters of magnetic manganese.

This allows the system to encode information in both the level of electricity and the direction of magnetism, granting it far greater computing power than other neuromorphic systems without taking up additional physical space.

connects with high-speed electrical probes.

NIST

But millions of synapses would be necessary before a system based on the technology could be used for complex computing, Schneider says, and it remains to be seen whether it will be possible to scale it to this level.

Steven Furber, a computer engineer at the University of Manchester, UK, who studies neuromorphic computing, says that this might make the chips impractical for use in small devices, although a large data centre might be able to maintain them.

But Schneider says that cooling the devices requires much less energy than operating a conventional electronic system with an equivalent amount of computing power.

But he adds that it would be a long time before the chips could be used for real computing, and points out that they face stiff competition from the many other neuromorphic computing devices under development.

For instance, there are many outstanding questions about how synapses remodel themselves when forming a memory, making it difficult to recreate the process in a memory-storing chip.

|