|

by Susanne Posel

from OccupyCorporatism Website

Human Rights Watch (HRW) has released a report entitled “Losing Humanity - The Case Against Killer Robots,” which warns that autonomous synthetic armed forces lack the conscious empathy that human soldiers have and could perform lethal missions without provocation.

Autonomous synthetic robots used as weapons cannot inherently conform to “the requirements of international humanitarian law” as they cannot adequately distinguish,

Using the excuse that these robots would save military lives in combat situations does not address the fact that they are fully programmable computers lacking compassion for human life, whether that of the targeted “enemies” or of civilians.

The HRW report states:

Indeed, the question of responsibility is unsettled from a legal standpoint: who is ultimately responsible for the actions of a synthetic armed force robot?

Would the ultimate charge fall to the:

HRW, a non-governmental organization, has partnered with Harvard Law School International Human Rights Clinic to demand that an international treaty be drawn that would strictly,

The restriction of national governments from developing, producing and using these “weapons” within domestic borders is also being brought to light.

The South Korean government has created the SGR-1 sentry system, which has been deployed in demilitarized zones to identify intruders and fire on them with the assistance of a human operator.

The SGR-1 is also fully autonomous and can be enabled to carry out missions without the control of a remote operator. The SGR-1 has an extremely limited ability to gauge the complex environments created by war.

Its ability to calculate responses is narrow, and therefore its “reasoning” and decision-making skills are questionable. This robot cannot distinguish between combatants and non-combatants, which leaves it highly lethal regardless of its target.

Predicted by an Air Force report entitled, “Technology Horizons” published in 2010,

This experiment monitored the,

This endeavor sought to understand human decision-making systems in order to give the US military industrial complex,

The Department of Defense (DoD) asserted that although current ground-based weapons were being used extensively,

Small autonomous robots, such as tiny robotic insects, are now used to spy on targets.

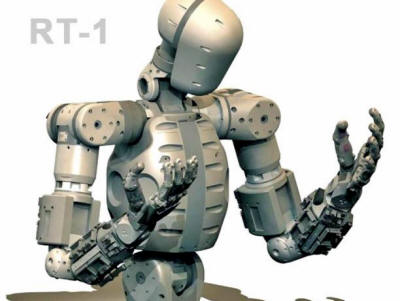

In August, DARPA awarded Boston Dynamics, Inc. a $10.9 million contract to manufacture humanoid robots that are bipedal, built like humans and equipped with a sensor head with on-board computing capabilities. Completion of the project is expected in August 2014.

These robots are being created to assist in excavation and rescue missions, according to DARPA. They could also be employed in evacuation operations during either man-made or natural disasters.

DARPA’s Autonomous Robotic Manipulation (ARM) program seeks to find ways to utilize different remote robotic manipulation systems that are controlled by humans. This program is divided into 3 aspects:

The Naval Research Laboratory has developed SAFFiR, the Shipboard Autonomous Firefighting Robot. SAFFiR is an autonomous bipedal humanoid robot, based on the CHARLI-L1 robot created at Virginia Tech.

This robot can interact with humans through a comprehensive response system that uses language, including slang, to make it more familiar. A robot that can hold a conversation and fight fires is quite impressive.

In 2010, DARPA revealed a robot at the Association for Unmanned Vehicle Systems International Conference in Denver that was interactive in a public forum. Participants would write software and have the robot perform specified tasks. The goal of this event was to show that robots were being developed by the US government to perform “dangerous tasks” such as disarming an explosive device, thereby reducing “significant human interaction”.

The DARPA Robotics Challenge is putting out the call for a synthetic force that can be designed for autonomous thought yet mitigate the risk to human life when performing a rescue mission.

Thanks to the Robotics Challenge, autonomous robots will be able to process operator interface, signals, neural signatures and cognitive visual algorithms.

In short, although DARPA cannot utilize precise technologies to allow fully autonomous robots to engage in missions independently, progress toward this goal is being made quickly.

DARPA claims:

Goose says that the systematic halting of,

It is clear that, regardless of the risks, the US military is working toward a synthetic armed force that would fight in place of current active-duty soldiers.

A force of robotic “peacekeepers” programmed to become violent without remorse will enable the government to organize and act where human law enforcement may hesitate.

|